New smart glasses tell you what to say on dates using GPT-4: What does this say about our sense of self? | AI seduction

I: INTRODUCTION – AI SEDUCTION

Less than five months ago, I introduced in one of my articles[1] the idea of AI-aided seduction:

“How far can technology get when it comes to online seduction? What if ChatGPT, or a similar bot, can make an impression of such a realistic social interaction that you could never trust any written interaction with strangers unless you see their webcam? We know that it is already possible, but only for short-term social interaction, but what if these bots evolve enough that they will be able to hold online friendships for months or years upon end, remembering all the details of the past conversations?

This is why I make a prediction that in 5-10 years, it will become impossible to hit on people by chatting them up on Tinder, Facebook, Instagram or any social media that functions by text. The fear is not that the person you are talking to is a bot – the fear will be that they will be a human using a bot.

Imagine this: I see a hot girl on Instagram, I input into ChatGPT a few personal details about myself and about herself, and then I tell ChatGPT to go seduce her. Then I, the real human, will simply see the conversation unfold under my eyes while ChatGPT does all the work.

But what if she does the same? What if she’s also a human using a bot? She will see me and also use ChatGPT to respond to my conversation in the same way.

I will be behind my screen, watching the conversation unfold, and thinking: “Hah, she actually fell for it! She’s actually texting me while I’m letting the AI do all the work!”. And she will be behind her own screen, watching the conversation unfold and thinking: “Hah, he actually fell for it? He’s actually texting me while I’m letting the AI do all the work!”.

But, behind this illusion, what if we both fall in love, and I actually fall in love with the way “she” texts me, not knowing that it’s a robot talking to “me”, and she falls in love with the way “I” text her, not knowing that it’s a robot talking to “her”? And we both fall in love with “each other” without ever exchanging a word, when it’s just two robots doing all the talking? The future is now. And the more terrifying question is – how much of this hypothetical scenario is simply the more upfront and “extreme” version of what we were already doing anyway in real life?”

I gave it “5-10 years”, but not even five months have passed and something even more radical has been created: NEW SMART GLASSES TELL YOU WHAT TO SAY ON DATES USING GPT-4[2].

Here is what the authors of the article have to say about the subject:

A team of crafty student researchers at Stanford University have come up with a pair of smart glasses that can display the output of OpenAI's GPT-4 large language model — potentially giving you a leg up during the next job interview, or even coaching you during your next date.

The device, dubbed rizzGPT, offers its wearer "real-time Charisma as a Service" (CaaS) and "listens to your conversation and tells you exactly what to say next". "Say goodbye to awkward dates and job interviews," Chiang wrote.

The glasses were made using a monocle-like device that can be snapped onto practically any glasses, built and donated by Brilliant Labs. It features a camera, microphone, and a high-resolution display that can output text generated by GPT-4.

OpenAI's speech recognition software, Whisper, allows the glasses to feed speech directly to the chatbot, which can generate answers in a matter of seconds to its wearer. The smart glasses' generated text, which was displayed on the device's tiny screen and read aloud by Stanford's Varun Shenoy, did feel a little stunted.

The linked article includes a video of how the glasses currently work in the context of a staged job interview. Of course, for the moment, the glasses have a delay of a few seconds, and the AI isn’t very “human-like”, so it would be obvious if someone were to use it. But technology is evolving really quickly – how long until AI can “pass as human”? Probably not long. This raises serious questions regarding the nature of identity, subjectivity, culture and social norms, seduction, manipulation and our entire “sense of self”. Let’s try to answer a few of them.

II: CYBERNETIC FEEDBACK LOOPS

I want to consider this scenario: two people are on a date, both of them are using this AI technology, but neither of them is aware that the other person is using it. Each of them, individually, considers the possibility that the other person may or may not be using a similar pair of glasses.

Let’s make a few assumptions in order to simplify our analysis:

1. The glasses become contact lenses. This is to exclude scenarios in which humans become paranoid about everyone wearing glasses, such that it becomes a habit to ask the other person to switch glasses at the beginning of every social encounter or something like that. Once they are contact lenses, there is no way to check.

2. These smart contact lenses give you instant replies, with no need to wait out the 3-5 second delay of the current version.

3. Both people are capable of reading very fast from their prompt, such that it is not clear that they are reading from a prompt.

What is going on here, exactly? Both people are simply reciting whatever their AI tells them, without being aware that the other person is doing exactly the same. Hence, we are dealing with two AIs trying to seduce each other. The two people may form some sort of attachment, or even fall in love, when they never actually “exchanged a word” per se – the AI talked for them, and the act of seduction was “outsourced”.

One can’t help but be reminded here of Slavoj Zizek’s ideal sex[3]: she brings her vibrating dildo, I bring my vibrating fleshlight, she puts her dildo into my fleshlight, we plug them in, and the machines are buzzing in the background and now we’re free… free to watch a deepfake porn video in which we have replaced the male actor’s face with my face and the female actor’s face with hers.

But the more important question here is: what is the feedback loop between the AI chat model and the humans who use it? The AI chatbot copies human behavior… but if people start using these glasses/contact lenses en masse, won’t humans also start copying the chatbot?

An even more interesting question: how will the behavior of humans be influenced by the existence of these glasses when they are not using them? In other words, will human behavior in general try to copy the AI model? If AI can model human behavior, learn from the least and most successful seductions, and try to copy only the most successful ones, with the model itself being fed on the prompts that people read when they are using the glasses… who is to say that the behavior of people who go on dates without the glasses won’t become more and more similar to that of the people who use them? AI will copy humans… but humans might copy AI too. Or will they? What if they do the opposite of AI?
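A toy model makes this mutual imitation concrete. It is assumed here purely for illustration and is not taken from any of the sources. Let h be some measure of how people without glasses talk on dates, and let a be some measure of what the AI recommends. In each round, humans move a fraction beta of the way towards the AI, and the AI moves a fraction gamma of the way towards humans. A negative fraction stands for "doing the opposite". Subtracting the two update rules gives d_{t+1} = (1 − beta − gamma)·d_t for the gap d = a − h. So, in this simplified picture, everything hinges on one factor.

```python
def imitation_gap(h0: float, a0: float, beta: float, gamma: float, steps: int) -> list[float]:
    """Gap a_t - h_t between AI advice and unaided human behavior, for t = 0..steps.

    Toy model (an assumption, not an empirical claim):
      h_{t+1} = h_t + beta  * (a_t - h_t)   # humans copy the AI (beta < 0: do the opposite)
      a_{t+1} = a_t + gamma * (h_t - a_t)   # the AI copies humans (gamma < 0: do the opposite)
    """
    h, a = h0, a0
    gaps = [a - h]
    for _ in range(steps):
        # Tuple assignment: both sides react to each other's *previous* state.
        h, a = h + beta * (a - h), a + gamma * (h - a)
        gaps.append(a - h)
    return gaps


# Mutual copying: the gap shrinks by the factor 1 - 0.2 - 0.3 = 0.5 each round.
print(imitation_gap(h0=0.0, a0=1.0, beta=0.2, gamma=0.3, steps=5))
# Mutual "doing the opposite": the factor is 1 + 0.2 + 0.2 = 1.4, so the gap grows.
print(imitation_gap(h0=0.0, a0=1.0, beta=-0.2, gamma=-0.2, steps=5))
```

The factor 1 − beta − gamma determines where the loop goes. If it lies strictly between −1 and 1, the gap dies out. If it is negative, the gap flips sign every round. If it exceeds 1 in absolute value, the gap blows up.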

It is clear that the two influence each other. This is an infinite feedback loop.

It is here, when we are dealing with feedback loops, that we must turn to cybernetics. In cybernetics, we distinguish between asymptotically stable, marginally stable and unstable systems[4]:

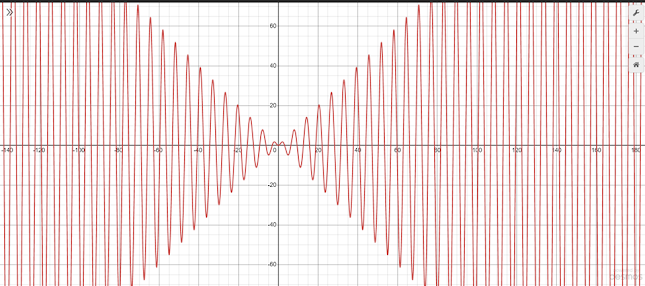

Let’s say, in the diagram above, that the horizontal axis represents time and the vertical axis represents the difference (measured in some way) between the behavior of people on dates without glasses and the behavior of people on dates with glasses. An asymptotically stable system converges towards a fixed point. Above, it converges to 0: this is the scenario in which humans copy AI and AI also copies humans until the two converge, such that there would be no need for glasses in the first place.
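In the simplest version of this picture, which is linear and assumed here only for illustration, the gap d between the two behaviors obeys d_{t+1} = a·d_t + b for some constants a and b. When |a| < 1, the gap settles at the fixed point d* = b/(1 − a). With b = 0 that fixed point is 0, which is the case just described. With b ≠ 0 it is a non-zero constant. Nothing in the article pins down a or b, so the sketch only illustrates the mathematics.

```python
def iterate(d0: float, a: float, b: float, steps: int) -> list[float]:
    """Return d_0, ..., d_steps for the toy gap model d_{t+1} = a * d_t + b."""
    values = [d0]
    for _ in range(steps):
        values.append(a * values[-1] + b)
    return values


def fixed_point(a: float, b: float) -> float:
    """Equilibrium gap d* solving d* = a * d* + b (undefined when a == 1)."""
    return b / (1 - a)


# |a| < 1, b = 0: the gap halves every round and heads to 0.
print(iterate(d0=1.0, a=0.5, b=0.0, steps=5))  # [1.0, 0.5, 0.25, 0.125, 0.0625, 0.03125]
# |a| < 1, b != 0: the gap settles near a non-zero constant instead.
print(iterate(d0=0.0, a=0.5, b=1.0, steps=40)[-1], fixed_point(0.5, 1.0))
```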

There is also the possibility of an asymptotically stable system which converges to a constant that is not 0. This would be the scenario in which the “difference” between the behavior of people with glasses and people without glasses persists, but stays constant across time. Here is an example[5]; the graph below converges to about 2.607:

A marginally stable system is one whose output neither dies out nor blows up: left to itself, it stays bounded without settling to zero. In a marginally stable system, this difference will keep shifting in a certain pattern or within a certain bound.
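The textbook marginal case in the toy recurrence d_{t+1} = a·d_t + b is a = −1 with b = 0, that is, d_{t+1} = −d_t. The gap flips sign every step but keeps exactly the same size, so it stays bounded without ever dying out. This is a sketch of the idea, not a model fitted to any data.

```python
def zigzag(d0: float, steps: int) -> list[float]:
    """Gap under d_{t+1} = -d_t: the sign flips every step, the size never changes."""
    values = [d0]
    for _ in range(steps):
        values.append(-values[-1])
    return values


gaps = zigzag(0.8, 6)
print(gaps)                       # [0.8, -0.8, 0.8, -0.8, 0.8, -0.8, 0.8]
print(max(abs(g) for g in gaps))  # 0.8: bounded, but never decays to 0
```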

The output of an unstable system does not converge. In calculus, divergent functions are functions that either tend to infinity or have no limit. The first diagram above (with three graphs) represents an unstable system that tends to infinity – in other words, the difference between the behavior of people without glasses and the behavior of people with glasses will get larger and larger instead of smaller and smaller.
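With |a| > 1 and b = 0, the toy recurrence d_{t+1} = a·d_t (assumed here for illustration) has the closed form d_t = a^t·d_0. Any non-zero starting gap, however small, therefore grows geometrically. It exceeds a given threshold after roughly ln(threshold/|d_0|)/ln|a| steps. The helper below, a hypothetical name chosen for this sketch, counts those steps directly.

```python
def steps_to_exceed(d0: float, a: float, threshold: float) -> int:
    """Smallest t with |a**t * d0| > threshold in the toy model d_{t+1} = a * d_t."""
    if abs(a) <= 1 or d0 == 0:
        raise ValueError("the gap only grows without bound when |a| > 1 and d0 != 0")
    t, d = 0, d0
    while abs(d) <= threshold:
        d *= a  # one more round of the feedback loop
        t += 1
    return t


print(steps_to_exceed(d0=0.01, a=1.1, threshold=1.0))    # 49
print(steps_to_exceed(d0=1.0, a=2.0, threshold=1000.0))  # 10
```

A 10% amplification per round is enough to turn a 1% gap into a 100% gap in under fifty rounds.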

However, there is also the scenario in which the function diverges because it has no limit at all. This is the “most unstable” one in colloquial language, although of course in mathematical language it’s “just as unstable” as the one which tends towards infinity. Here is an example: the function f(x) = x·sin(x).
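Numerically, x·sin(x) can be sampled along two sequences of inputs that both run off to infinity. At x = π/2 + 2πk the sine equals 1, so f(x) = x. At x = 3π/2 + 2πk it equals −1, so f(x) = −x. The function keeps reaching ever larger positive and ever larger negative values, which is why it has no limit, not even an infinite one.

```python
import math


def f(x: float) -> float:
    return x * math.sin(x)


# Two sequences of inputs that both run off to +infinity.
peaks = [f(math.pi / 2 + 2 * math.pi * k) for k in range(6)]        # sin(x) is (numerically) 1, so f(x) ~ x
troughs = [f(3 * math.pi / 2 + 2 * math.pi * k) for k in range(6)]  # sin(x) is (numerically) -1, so f(x) ~ -x

print([round(v, 1) for v in peaks])    # climbs without bound
print([round(v, 1) for v in troughs])  # falls without bound
```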

So, we actually have five scenarios:

1. Asymptotically stable, tends to 0. This is the case of the “singularity” where AI and humans copy each other until they become identical, such that there is a script for every social situation. People, in this case, will wear the glasses until they no longer need to, because there will be no difference between “with-glasses” and “no-glasses”.

2. Asymptotically stable, tends to a constant different from 0. This is the case where AI and humans both copy each other until, after some time, the difference between AI and human-with-no-AI-help becomes constant.

3. Marginally stable. This is the “zig-zag” case where AI and humans-with-no-AI-help imitate each other in some cases while doing the opposite in others, such that the difference between AI and human keeps changing in a certain pattern. For example, the human does the opposite of what the AI does; then, after a few years, they switch, and what AI used to tell you to say on dates is now what humans with no AI tend to say, and vice versa. This is the most interesting one, since it’s almost as if human society as a whole is playing a collective game of seduction with the AI model. This would be the case, for instance, if people start to recognize the speech patterns of people who use the AI, so those patterns become less seductive because people realize the speaker is using the glasses; now the most seductive people are the ones who do the exact opposite of what the AI tells them to do. The AI catches onto this, so it starts recommending the opposite thing, but people catch onto this too, so now the initial thing is seductive again, and so on…

4. Unstable, tends to infinity. This is the case in which AI and humans model each other negatively, such that they become more and more different as time goes on.

5. Unstable, has no limit. This is the case where no pattern can be detected.
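The five scenarios can be mapped roughly onto the coefficients of the toy recurrence d_{t+1} = a·d_t + b. This mapping is an assumption made for illustration only. Real social dynamics are not a first-order linear recurrence. Scenario 3 then shows up only at the knife-edge a = −1. Scenario 5 ("no pattern") is only loosely approximated by a growing oscillation, which does have a pattern. A richer, nonlinear model would be needed to capture genuinely patternless behavior.

```python
def classify(a: float, b: float) -> int:
    """Map the toy gap model d_{t+1} = a * d_t + b onto one of the five scenarios.

    Assumes the starting gap d_0 is not already sitting at the equilibrium.
    """
    if abs(a) < 1:
        # converges to the fixed point b / (1 - a): zero or a non-zero constant
        return 1 if b == 0 else 2
    if a == -1:
        # d alternates between d_0 and b - d_0 forever: a bounded zig-zag
        return 3
    if a == 1:
        # b == 0: the gap freezes at d_0 (constant, though technically marginal);
        # b != 0: the gap grows linearly without bound
        return 2 if b == 0 else 4
    if a > 1:
        # geometric growth in one direction
        return 4
    # a < -1: oscillation with growing amplitude and alternating sign, no limit at all
    return 5


for a, b in [(0.5, 0.0), (0.5, 1.0), (-1.0, 0.3), (1.2, 0.0), (-1.5, 0.0)]:
    print(a, b, "-> scenario", classify(a, b))
```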

In order to find out which of these five scenarios is most likely to happen, we need to introduce Lacan’s concept of the big Other.

III: WHAT ARE WE DOING TO THE BIG OTHER?

What is the big Other? Ask a hundred Lacanians and you’ll get a thousand answers. It has been defined and described as multiple, sometimes contradictory things; let us note a few:

1. The “invisible third presence in the room” when two people are talking, which does not even exist, but people act as if someone were there (ex: “the sexual tension in the atmosphere”)

2. The set of all unordered signifiers

3. The literal, surface-level interpretation of a message

4. “Someone else”, but not a specific other person – some otherness that cannot be pinpointed to an actual, specific human subject

5. Society

6. The symbolic mother-figure

7. The “subject supposed to know” (ex: as in the “bystander effect”, where everyone expects someone else to call the cops)

8. The “subject supposed to not know” (ex: as in the cases of “play pretend”: I am lying to you, you know that I am lying, I know that you know that I am lying, and yet both of us still pretend that I am telling the truth – in this case the only one who is fooled is this “big Other”)

9. God

10. The context of the social interaction[6]

How can the big Other be all of those things at once? How can the big Other be limited to the literal interpretation of your speech, and yet be the set of all possible words, symbols, gestures and other means of communication (signifiers)? How can the big Other be simultaneously the subject supposed to know and the subject supposed to not know? What does it have to do with God? Instead of going into further theoretical explanation, let me just give many concrete examples of the big Other at play. This will help us better understand what the big Other is, by seeing what all of these examples have in common.

EXAMPLE 1: In Slovenian academia, a polite way to tell your colleague that their intervention was “boring and stupid” is to say that it was “interesting”. “Interesting” becomes a euphemism for boring and stupid: if you call their intervention interesting, they will understand that you found it boring and stupid, and everyone will continue to pretend that you found their intervention interesting. Yet, if you were to actually call their intervention boring and stupid, the colleague would be justified in thinking “If they actually found it boring and stupid, why didn’t they just call it interesting like everyone else?”. In this case, the meaning of each signifier is displaced: “interesting” means boring and stupid, but because of this, “boring and stupid” also starts meaning something else – something worse than boring and stupid. In this case, the big Other is that imaginary presence, which does not even exist, that actually believes the intervention was interesting (the subject supposed to not know): an omnipresent deity which interprets every social interaction literally. This example is from Zizek.

EXAMPLE 2: If I am in a group of people and each of us is thinking of some dirty detail, and everyone knows that everyone else is thinking of that same dirty detail, we still avoid saying it out loud. If someone does say it out loud, it still causes discomfort, despite the fact that no one learned any new information. In this case, we would say that the moment we said out loud what everyone else was already thinking, the big Other found out. This example is also from Zizek.

EXAMPLE 3: Sometimes, when I’m all alone in a room and no one sees me, I may still feel embarrassed or uncomfortable if I start crying at a movie, as if somebody were watching me, even if no one is. I am afraid of the big Other’s gaze.

EXAMPLE 4: In Romania we also have a YouTuber who started doing those “catching a predator” type of videos (“Justitiarul de Berceni”) – he makes fake social media accounts where he pretends to be an underage girl, he sets up meetings with predators, and he shows up in real life and calls the cops on them. Yet, we also do not have a very good grooming law here; the penal code in Romania only prohibits explicit sexual demands to minors under the age of consent. This led to many situations in which the YouTuber caught a predator who invited his fake account (thinking it was an underage girl) to his place in the middle of the night, and yet the police couldn’t do anything to punish him, because in Romania “No, officer! I invited the girl to my place to play Monopoly, I didn’t have any sexual intentions with her!” becomes a legal defense. What is going on here is that each individual human being who saw the situation knew what was actually going on – every cop, the YouTuber, everyone who watched the video, the predator, and so on… and yet as a whole, the group of humans still couldn’t do anything about it. In other words, if they were to actually go to court, the judge, the accused, the witnesses, the lawyers, everyone would know what it was about, but the entire trial would be about trying to prove to the big Other that the invite had a sexual undertone. The big Other, which does not even exist, is here “the legal system” or “the literal interpretation of the law”. The big Other here is again “the subject supposed to not know” – it can watch over everyone but is kind of dumb.

EXAMPLE 5: One million dollars will be given to the first person who can mathematically prove the Riemann hypothesis to be true. Yet, who are we actually convincing? What does it mean to “formally prove” a theorem in mathematics? Practically every mathematician and math student who understands what the hypothesis states is, at an individual level, already convinced that it is true. No one has formally proved it yet, however. In other words, when we formally prove something in mathematics, we convince the big Other – an abstract set of rules that functions like an imaginary presence, a being that does not even exist and yet still influences our behavior…

EXAMPLE 6: Let’s say we have a family who pretends to believe that Santa Claus brought the presents for Christmas. You take each person aside in private and ask them if they believe in Santa Claus. The parents might respond something like “Of course not, we’re the ones buying the presents, but we pretend to believe because our children believe and we don’t want to ruin Christmas for them”. The children may respond something like “Of course not, we’re not idiots, but we pretend to believe so that we get presents”. No one believes in Santa Claus at an individual level, and yet the family believes in Santa Claus as a group – the belief can function in society even if no one takes it seriously at a personal level. Here the big Other is the subject supposed to believe, or the subject supposed to know. This example is also from Zizek.

EXAMPLE 7: The bystander effect. Everyone assumes that someone else will call the cops. Here the big Other is the subject supposed to know.

EXAMPLE 8: One repeated movie trope is two characters who have accumulated a lot of sexual tension throughout the movie and are finally about to kiss when, just before their lips touch, there is some sort of external intrusion – someone enters the room, an alarm bell rings, someone’s phone rings, etc. The lesson is clear: the “sexual tension” was never coming from the subject nor from the small other in front of them, but from an imaginary, invisible third presence that doesn’t technically exist but whose effects you can still feel. When there is silence in the room, you are having a “romantic moment”, and suddenly someone’s phone rings, the “moment” has been ruined, even though nothing changed about the particular individuals themselves: you found out no new information about the person in front of you, and neither did they find out any new information about you. Hence, we do not directly enjoy the person in front of us, we enjoy “moments”, i.e. bits and pieces of the big Other. This is most evident in the controversial political discussions regarding explicit and implicit sexual consent. The most common complaint I see against the politically correct liberal version of sex, where one constantly asks for permission for every small move, is that it ruins “the mood”. Notice the specific phrasing: it does not ruin my mood, it does not ruin the mood of the person in front of me; in fact it is no one’s mood in particular, but the mood. It is a mood that is had by no one in particular and yet it is still there, in “the atmosphere” (ex: the sexual tension in the room, that “floats in the air”, seeming to come “out of nowhere”, etc.). In Lacanian language: you ruin the big Other’s mood.[7]

EXAMPLE 9: “When, after being engaged in a fierce competition for a job promotion with my closest friend, I happen to win, the proper thing to do is to offer to withdraw, so that he will get the promotion, and the proper thing for him to do is to reject my offer - this way, perhaps, our friendship can be saved. What we have here is symbolic exchange at its purest: a gesture made only to be rejected. The magic of symbolic exchange is that, although at the end we are where we were at the beginning, there is a distinct gain for both parties in their pact of solidarity. Of course, the problem is: what if the person to whom the offer to be rejected is made should actually accept it? What if, having lost the competition, I accept my friend's offer to get the promotion after all, instead of him? A situation like this is properly catastrophic: it causes the disintegration of the semblance (of freedom) that pertains to social order, which equals the disintegration of the social substance itself, the dissolution of the social link.”[8] This is another example from Zizek – and the big Other here is the imaginary person who actually believes that we literally meant everything we said.

EXAMPLE 10: Each different context of social interaction has its own unwritten rules, which is why I add that the big Other is context too (in today’s digital age of the internet, it would make more sense to speak of the big Others…). Consider this example from one of my previous articles: “That is, the more people expect you to say something, the less of a surprise it is when someone actually says it, and therefore, the less likely it is that it “crosses the limit”. Online dating is the best example of this. The already-present function to decrease inhibitions in social media platforms like Facebook and Instagram is taken to the extreme in dating apps such as Tinder: since there is an expectation inherent to the very purpose of the app, there is less inhibition. A random example: most people would consider it a bit rude or at least confusing to find out that you’re going to have a new classmate next semester that you will see in real-life soon, find them on Facebook first and ask them their height during the first conversation. Not on Tinder, there, you implicitly give your consent to be “probed for compatibility” and thus it is “normal” to ask a man’s height after you just met him. All expectations are circular: people expect others to be asked their height because people are more likely to ask their height on a dating app, and people are more likely to ask the other’s height because they are expected to, ad infinitum. This is a positive feedback loop.”[9] The big Other here consists of the set of implicit assumptions and expectations created by the bare fact that someone did or did not choose to enter a social situation. A person on Facebook or in real life did not “consent to” being asked his or her height, but someone on Tinder sort of did[10].

This is how internet spaces can speed up real-life social rituals. It is commonly assumed that there is a lot of “bullshit” one has to go through in order to even get to the point where it is appropriate to ask someone for sex, but is there? What if we have a subreddit like r/BreedingR4R (NSFW warning)? It is a forum designed specifically for people who want to impregnate someone or be impregnated just for the sake of it. Ignoring the fact that it’s unlikely for something like that to ever become popular, for the small number of people who use that subreddit, everyone who chooses to enter it goes there for the same reason. There is no need to get married and start a family to even consider impregnating someone; it is socially inappropriate to even request that in any other context, but not in this one, among the people who have already chosen to join that subreddit. Now you have accelerated, or “sped up”, the process a hundredfold.
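The circular expectation described in Example 10 can be sketched as a positive feedback loop: people ask about height because it is expected, and it is expected because people ask. Everything in the sketch is an assumption made for illustration, including the hypothetical function name and the idea that the expectation simply equals last round's observed share of chats that ask about height. The growth rule is also assumed: the logistic update ask += gain·expectation·(1 − ask). With 0 < gain ≤ 1, the share rises every round and saturates just below 1. In other words, the feedback amplifies a small habit until it becomes the norm of the context.

```python
def height_question_loop(ask0: float, gain: float, steps: int) -> list[float]:
    """Share of first chats that ask about height, round by round (toy model).

    Assumptions for illustration only: the expectation equals last round's
    observed share, and expectation pushes the share up logistically.
    """
    if not (0 < ask0 < 1 and 0 < gain <= 1):
        raise ValueError("expects 0 < ask0 < 1 and 0 < gain <= 1")
    ask = ask0
    history = [ask]
    for _ in range(steps):
        expectation = ask  # people expect to be asked as often as they saw it happen
        ask += gain * expectation * (1 - ask)  # more expectation -> more asking, capped below 1
        history.append(ask)
    return history


shares = height_question_loop(ask0=0.05, gain=0.8, steps=15)
print([round(s, 2) for s in shares])
```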

EXAMPLE 11: The best jokes start with “do you know the one with…” – they all came from somewhere, but no one knows exactly where. These are jokes inscribed into the big Other.

EXAMPLE 12: If a phrase starts with “they say that…”, then the big Other believes in it. Who is this vaguely-defined “they” if not the big Other? Everyone has heard what “they” say, but no one knows exactly where it came from.

Taking all of these examples into account, we can form a general model of language and communication: communication is never a direct transfer between a sender and a receiver. Any social interaction has a “plus one” – two people who are talking have a third, imaginary presence. Five people who are talking have a sixth, imaginary presence, and so on. This “plus one” is the big Other. Everything we say gets filtered through this “big Other”, the conglomeration of expectations, implicit assumptions, cultural norms, contextual cues and unwritten rules of social interaction. You are constantly being watched by this imaginary figure. Communication is never “sender -> receiver” but “sender -> big Other” followed by “big Other -> receiver”.
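The “sender -> big Other -> receiver” structure can be made concrete with Example 1 from Slovenian academia. In this deliberately crude sketch, the big Other is nothing but a shared table of conventions for one context. The sender never addresses the receiver directly: the sender encodes through the table, and the receiver decodes through the same table. The table contents and the function names are hypothetical, chosen only to illustrate the model.

```python
# Hypothetical convention table for a single context (Example 1): intended meaning -> what one says.
SLOVENIAN_ACADEMIA: dict[str, str] = {"boring and stupid": "interesting"}


def say(intended: str, conventions: dict[str, str]) -> str:
    """Sender -> big Other: the intended meaning is encoded via the context's conventions."""
    return conventions.get(intended, intended)


def hear(uttered: str, conventions: dict[str, str]) -> str:
    """Big Other -> receiver: the utterance is decoded against the same conventions."""
    decoding = {spoken: meant for meant, spoken in conventions.items()}
    if uttered in decoding:
        return decoding[uttered]  # "interesting" is heard as "boring and stupid"
    if uttered in conventions:
        # Saying the literal phrase means deliberately skipping the euphemism,
        # so the message is displaced to something worse.
        return f"worse than {uttered}"
    return uttered  # no convention applies: taken literally


print(hear(say("boring and stupid", SLOVENIAN_ACADEMIA), SLOVENIAN_ACADEMIA))  # boring and stupid
print(hear("boring and stupid", SLOVENIAN_ACADEMIA))  # worse than boring and stupid
```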

One does not need to be consciously aware of the big Other in order to be influenced by it. A lot of people I know say that they interact with people “by vibes”, that they “just feel” the mood, the social cues and so on… It is precisely then that we adjust to the unwritten rules of the context we are in.

Taking all of this into account… aren’t we already wearing the glasses? Isn’t this set of contact lenses that tells you what to say during a date or job interview exactly what we’ve been doing for our entire human history? The AI is the big Other. Each social interaction between two people has always already been an interaction between four people – what I say, what you say, what I think that I should say and what you think that you should say. There’s my personal interpretation of the big Other (what I think I should say, what I think is appropriate to say, etc.) and your personal interpretation of the big Other, and in “common” or “typical” social situations, the two should converge, leading to one single big Other. This model of AI glasses simply “zooms in” on the big Other(s), making it/them more “detailed” – hence amplifying to an extreme a tendency humans already had.

You don’t have an AI telling you what to say word for word in a social situation. But you do have a set of cultural norms and values learned from the outside. After all, we are all looking for authenticity in the other, but isn’t it ironic? Both the boy and the girl want to find out the “true self behind the mask” of the other person.

The girl thinks “I want to find out what the boy really wants to say to me, without the social pressure of the context”. What is actually going through the boy’s mind: “I don’t know how to talk to girls. Let me look up this dating coach on YouTube who teaches me what to say”.

And the boy thinks “I want to find out what the girl really wants to say to me, without the inhibition of social norms”. What the girl actually thinks: “I don’t know what to tell him, let me look at this girl on TikTok giving dating advice while putting on make-up in order to find out”.

In other words, as Jacques Lacan said: “our desire is the desire of the big Other”. This means two things:

1. We desire to be desired by the big Other

2. We desire whatever the big Other desires

We already look to a set of social norms and cultural expectations in order to modify our behavior, and thus our entire identity and sense of self. We already have an AI (a big Other) “telling us what to say” in a social situation, so we are never truly authentic per se; everything we say is in relation to that extra presence.

And we don’t form attachments and fall in love with others per se, but with our image of what the other is (recall Jung’s infamous “anima/animus projection”). Remember here the situation of the Bosnian couple in 2007 who cheated on each other, online, with each other[11]: each of them created a fake account on an online forum in order to cheat, and their fake accounts found each other and had an affair, neither knowing that the other person behind the affair was their spouse all along. Despite the fact that the image of “my wife” and the image of “this girl I found on the internet” are controlled by the same real-life human, I can have an altogether different relationship with each of them if I am not aware of this fact – and when I find out that behind that fake account was my real wife (that my wife’s been cheating “with me”, not knowing that it’s “me”), this is worse than catching my wife cheating with anybody else, because I have to deal with three pieces of bad news all at once; the pain is tripled:

1. I am caught cheating (with my wife’s fake account)

2. I catch my wife cheating (with my own fake account)

3. My mistress dies (I find out that my mistress never existed)

I talked about the infinite feedback loop between the AI model and how humans speak outside its presence. But isn’t this what we already do with social norms? Unwritten rules and cultural expectations of what to say in a social context are changed by what humans do with them, and what humans do is changed by those social norms. It’s an infinite loop. Or, as in the earlier Tinder example: people expect others to be asked their height on Tinder because people are more likely to ask about height on a dating app, and people are more likely to ask the other’s height because they are expected to, ad infinitum – which is how, in a specific social context (ex: Tinder), certain things are more or less appropriate to say than in another (ex: school).

Two more important ethical dilemmas should be raised here:

DILEMMA 1 – AUTHENTICITY: Where do we draw the line between copycat and authenticity? If I am talking to someone of the opposite sex and a friend texts them in my place, with the other person thinking that it’s me sending those texts, most people would consider this unethical and manipulative. The other person thinks they are getting an impression of “me” when in fact it is my friend texting in my place (this already happens with camgirls, where men pay to talk to them when in fact it is another person behind the screen talking “through” the persona of the camgirl). But this is something we always do! If I don’t let my friend use my phone to send texts “in my name”, can I still ask my friend for advice on what to say? How much can I be influenced by him and still remain authentic? If I don’t ask another person what to say, I may look up more general advice on what to say in a similar social situation and adapt it to my situation. Our parents and society taught us how to behave when we were young – so are we fake if we copy what they said? It’s clear that we are puppets of the big Other and always will be – then, where is subjectivity? Who am “I” in this equation? Am “I” simply my free will to choose what to say? By this argument, there is nothing manipulative either in using the glasses or in letting my friend text the girl “in my name”, because I can decide whether to hit send or not. I text someone, my friend tells me what to say word for word, and I decide whether to hit send or not – is “the true me”, in this case, the agent which decides what to borrow from the external signifying field of advice and injunctions?

DILEMMA 2 – SOCIAL CONTROL: What happens in our current climate, in which a very small set of people own and control the big Other? The globalization of information through both migration and the internet has led to an alienation[12] between contexts. The big Other between countries is uniting while the big Other within countries is dividing. This means that the unwritten rules, social norms and expectations of a context are becoming more and more detached from their physical space. In a matter of seconds, I can switch from Facebook to Tinder and experience a much more drastic cultural shift than if I were to travel to the other side of the planet. “What to say in a bar in Romania” is getting closer and closer to “What to say in a bar in Japan”, while “What to say on Facebook in Romania” is getting more and more distant from “What to say on Tinder in Romania”.

When Facebook, Instagram, Reddit, Tinder, 4chan and so on are all each owned by

one single person – one single person has indirect control over the entire way

we form relationships and connections, all the unwritten social norms of what

to say. Even if done unintentionally, the CEO of Meta/Tinder/etc. can change

something in the layout or in the algorithm of their websites and have an

entire butterfly effect on all the unwritten rules of what is appropriate/polite

or not to say in a social interaction on their platform. And, in the future,

that AI model (maybe it will even be Chat-GPT) that tells you what to say

during a date or job interview and that people will use more and more will also

likely be owned by one single person. Will this lead to a totalitarian regime

in which we think we are free? What are the potentials for mass psychological

manipulation? We will think that it is “manipulative” to use the glasses that

tell you what to say on a date when the real manipulation will be done by the

CEO that changes the algorithm with no transparency in order to pursue their

own interests. If one single person owns the big Other that tells you what is

appropriate or not to say… have we killed God? Or have we created Him? And yes,

people are not yet actively using AI to tell them what to say on dates and job

interviews. But we have AI to tell politicians what to say during political

campaigns in order to seduce the population into voting them – it’s called “Cambridge

Analytica”. AI seduction has already started.

If artificial intelligence can be used as a tool of social control, for instance to first create a product and then artificially create a demand for it by seducing us into buying it… then Deleuze and Guattari’s “desiring-production” machine takes on an altogether different meaning: now it’s an actual machine.

Remember that seduction has no morality13, only ethics. Morality is a function of desire: I first need to know what someone wants in order to judge an act from a moral perspective (e.g. “Do not do unto others what you don’t wish others to do unto you”; I first need to know what I (don’t) want in order to apply this). Ethics has no relationship to desire at all: an ethical rule is a rule that you follow religiously regardless of what anyone wants. Finally, seduction changes the other’s desire. If morality takes desire as its input and seduction produces desire as its output, then they endlessly circle around each other:

- How can I morally judge the act of changing what the voters want out of a politician, if morality requires me to first know what the voters want and the whole idea of political seduction is to change what the voters want?

- How can I morally judge the act of changing what the consumers want out of the products, if morality requires me to first know what the consumers want and the whole purpose of marketing-seduction is to change what the consumers want?

- How can I morally judge the act of changing what the man/woman in front of me wants (e.g. to make them want me more), if that requires me to first know what they want?

Only ethics can save us here.

IV:

BUT WE’RE ALREADY BECOMING AI-LIKE EVEN WITHOUT AI!

A human reading from an AI-generated prompt may feel “robotic”, but we have already been going through a “robotification” of human relationships for a long time. Seduction is in a weird place right now: we have too little of it where we should have more, and too much of it where we should have less.

Communication can be thought of as serving two primary functions:

1. Rational information-transfer – here, essence matters more than appearance, and truth matters more than people’s feelings.

2. Affective-aesthetic exchange – here, it matters less what something means and more that it “sounds good”; communication is focused on mere appearances, on producing an emotional effect in the listener.

In the past decades, we have seen more and more of the latter form of communication in politics, journalism, mass media advertising… Precisely in those places where we need more rationality and truth, there is a spectacle of seduction and appearances. Political debates are no longer a long process of rational argument, but a show of saying what sounds good and what produces an emotional effect in the voters. Journalism is less about truth and more about clickbait: more images, fewer words.

And precisely in the place where you could argue that, in many situations, the emotional effect matters more than the purely “logical” and “cold” transfer of information, namely in love, we see more and more of a hyper-rationalization of human relationships. In this sense, the world is upside down today.

One example that I’ve seen recently is the so-called “therapy-speak”14:

Last summer, Anna, 24, was dumped by a longtime friend over text. “I’m in a place where I’m trying to honor my needs and act in alignment with what feels right within the scope of my life, and I’m afraid our friendship doesn’t seem to fit in that framework,” the friend wrote. “I can no longer hold the emotional space you’ve wanted me to, and think the support you need is beyond the scope of what I can offer.”

Anna was hurt, and frustrated. “It felt like she was ending the friendship with an HR memo,” she said. “Like, I would have hoped that you’d respect me enough to give me something more straightforward, or at the very least more kind.”

And if your “boundaries” are violated, what would this TikTok/Instagram “self-care therapist” advise you to say? “I need to address this. You made me feel unsafe and unloved tonight.”

Trying to reschedule and rearrange events would be met with ‘The plan has changed. We’re going to do [alternative activity]. I’m setting a boundary.’”

If Lucy tried to protest, she says, her friend would accuse her of being pushy, which ultimately made her reluctant to make plans out of fear of coming off as “demanding” or “toxic.”

Soulless, lifeless, robotic language. No emotion left in any of it. It already feels generated by ChatGPT.

You think people would notice that a person reading off a ChatGPT-generated prompt is not human? Well, what we’ve been doing to our relationships for the past few years has already prepared us for this: even before ChatGPT catches up and becomes more “human-like”, we humans are creating a culture of relationship advice, dating advice and “self-care” that is already turning us into robots.

The popular attitude now is that “seduction is manipulation”, that indirect communication is “instigating rape culture”, and the most popular relationship advice right now is that “communication is the most important thing in a relationship”. So, yes, a relationship becomes two humans exchanging information in a robotic way, with no emotion left in either of them…

Byung-Chul Han describes this degradation as follows:

“Seduction requires a scenic, playful distance that leads me away from my personal psychology. Eroticism in the form of seduction is different from the intimacy of love, for in intimacy the playful aspect is lost. Constitutive of seduction is a fantasy of the other.

Porn, finally, marks the end of seduction. Today, even souls, like genitals, are offered unveiled. The loss of any capacity for illusion, semblance, theatre, play, drama – that signals the triumph of pornography. Porn is a phenomenon of transparency. The age of pornography is the age of unambiguousness. Today, we no longer have a sense for phenomena such as secrets or riddles. Ambiguities or ambivalences cause us discomfort. Because of their ambiguity, jokes are also frowned upon. Seduction requires the negativity of the secret. The positivity of the unambiguous only allows for sequential processes. Even reading is acquiring a pornographic form. The pleasure of reading a text resembles that of watching a striptease. It derives from a progressive unveiling of truth as though it were a sexual organ. We rarely read poems any more. Unlike popular crime novels, they do not contain a final truth. Poems play with fuzzy edges. They resist the production of meaning.

Political correctness also condemns ambiguity: ‘So-called “politically correct” practices . . . request a form of transparency and lack of ambiguity – so as to . . . neutralize the traditional rhetorical and emotional halo of seduction.’ Ambiguities are essential to the language of eroticism. The rigorous linguistic hygiene of political correctness makes erotic seduction impossible. Today, eroticism is stifled by political correctness as well as by porn.

Today, the time-consuming play of seduction is increasingly discarded in favor of the immediate gratification of desire. Seduction and production are not compatible: ‘Ours is a culture of premature ejaculation. Increasingly all seduction, all manner of enticement – which is always a highly ritualized process – is effaced behind a naturalized sexual imperative, behind the immediate and imperative realization of desire.’

Porn pervades the neoliberal dispositif as its general principle. Under the compulsion of production, everything is being presented, made visible, exposed and exhibited. Everything is subjected to the relentless light of transparency. Communication becomes pornographic when it becomes transparent, when it is smoothed out into an accelerated exchange of information. Language becomes pornographic once it no longer plays, once it only conveys information.”

(Byung-Chul Han, “The Disappearance of Rituals”, Chapter 10: From Seduction to Porn)

The compulsion to express desire is only a mask that veils the question of the cause of desire in the first place. To seduce a voter, an employer, a romantic interest or a consumer means to change what they want. To seduce oneself into doing something means not to do what one wants, but to make oneself want to do that thing (e.g. through classical conditioning). The recent compulsion to “communicate clearly what you want” is only a distraction from the more important question of why you want whatever it is that you want in the first place. Or, as Jean Baudrillard put it: “They wanted us to believe that everything was production… Everything is seduction and nothing but seduction.”15

Seduction, for Baudrillard, is transgressive of the neoliberal/capitalist order because it falls outside the entire realm of production: it is a pure play of signifiers with no clear “hidden meaning”, and so it escapes the market logic of commodifying every aspect of our intimate lives. Seduction is self-defeating and paradoxical. It is the very act of veiling itself, which cannot be exposed, and it is therefore one of the few things that cannot be commodified. Capitalism does not like the logic of seduction: everything must be exposed and presented in order to be clearly labeled, marketed, bought and sold.

V:

CAN AI “PLAY GAMES”? IF SO, IT’S THE RETURN OF THE CHRISTIAN GOD.

To continue the topic of how human relationships are already going through a “robotification”, and how “AI-speak” is already being pushed down our throats, let me recall an example I’ve already discussed in the past:

The best example I can think of right now is a trend I’ve seen practiced not only on some Discord servers, but also given as relationship/marriage advice: the giving of cards of a certain color in order to indicate what response you want from the other. The logic is this: I can come to my spouse/partner (or to a Discord channel) venting about a specific personal problem I have. According to the logic of political correctness, I should also show them (for instance) a red card if I am looking for concrete advice, a green card if I am looking for consolation/empathy and a blue card if I just want them to listen without responding.

This logic seems innocent at first: what is wrong with communicating your desires? But taking the logic to the extreme leads us into a deadlock. If I come to you with a question and also tell you in advance what answer to give me, then I take away your freedom to respond. In an “organic” and “natural” conversation, I may come to you with a problem, and you have the freedom to choose for yourself whether to give me advice, consolation, or simply to listen, including the freedom to offend me. By giving you a question and also telling you in advance what (type of) answer you should give me, I have just annihilated you as another person, putting you in the position of a lifeless object. In other words, I am not talking to you, but talking to myself through you.

Hence, this seemingly “psychotic” appropriation of politeness views social interaction and communication less as a game of chess with another person and more as a game of chess with yourself. The other has less and less freedom to choose their own answer. They are told in advance what to say, and the entire communication becomes a lifeless following of a pre-written script.

One could take this logic to its extreme: I come home to my wife, I tell her “Hi, how was your day?” and I show her a green card if I want her to respond with “Good, how about you?”, a red card if I actually want her to tell me how my day was, a blue card if I want her to tell me about her mood right now…

The popular trend of giving advice that amounts to hyper-communication and transparency ignores the very fact that humans have an unconscious. Its proponents act as if Freud never existed. When I come home ranting about my problems, I most often do not know what I want. I do not plan out the entire interaction in advance. Maybe I want advice, maybe I want emotional support, maybe I just want them to listen, maybe a mix of the above. Who knows what I want?

Speaking of the unconscious, haven’t they heard of transactional analysis? Here is what Eric Berne has to say on the subject:

“"Why Don't You—Yes But" is the game most commonly played at parties and groups of all kinds, including psychotherapy groups. The following example will serve to illustrate its main characteristics:

White: "My husband always insists on doing our own repairs, and he never builds anything right."

Black: "Why doesn't he take a course in carpentry?"

White: "Yes, but he doesn't have time."

Blue: "Why don't you buy him some good tools?"

White: "Yes, but he doesn't know how to use them."

Red: "Why don't you have your building done by a carpenter?"

White: "Yes, but that would cost too much."

Brown: "Why don't you just accept what he does the way he does it?"

White: "Yes, but the whole thing might fall down."

Such an exchange is typically followed by a silence. YDYB can be played by any number. The agent presents a problem. The others start to present solutions, each beginning with "Why don't you . . . ?" To each of these White objects with a "Yes, but. ..." A good player can stand off the others indefinitely until they all give up, whereupon White wins.

Since the solutions are, with rare exceptions, rejected, it is apparent that this game must serve some ulterior purpose. YDYB is not played for its ostensible purpose (an Adult quest for information or solutions), but to reassure and gratify the [inner] Child. A bare transcript may sound Adult, but in the living tissue it can be observed that White presents herself as a Child inadequate to meet the situation; whereupon the others become transformed into sage Parents anxious to dispense their wisdom for her benefit.

This is illustrated in Figure 8. The game can proceed because at the social level both stimulus and response are Adult to Adult, and at the psychological level they are also complementary, with Parent to Child stimulus ("Why don't you...") eliciting Child to Parent response ("Yes, but..."). The psychological level is usually unconscious on both sides, but the shifts in ego state (Adult to "inadequate" Child on White's part, Adult to "wise" Parent by the others) can often be detected by an alert observer from changes in posture, muscular tone, voice and vocabulary.”16

And here is Figure 8:

To sum it up: when people come to you asking for advice and then reject every piece of advice you give them, it is of course an unconscious gesture. People do not plan out in advance what they seek from the conversation, and, generally speaking, people do not know what they want.

Since the spectacle of mass media and “internet advice culture” is clearly trying to turn us into robots, the opposite question should be asked: can AI learn how to play games, in the sense the term is given in Transactional Analysis? Can an AI model like ChatGPT instruct a person to play a game with unconscious, ulterior motives, such as “Why don’t you / Yes, but…”?

If AI learns how to play games, then AI will have reached a point where it no longer believes its own bullshit. In other words, the big Other of society, the model of social norms and cultural values that will tell us what is appropriate or not to say in a social situation, will become self-divided.

There is another case in which we see a self-division of the big Other: Christianity. Speaking of which… is God the big Other? Here is what Zizek has to say:

“Is God then the big Other? The answer is not as simple as it may appear. One can say that he is the big Other at the level of the enunciated, but not at the level of the enunciation (the level which really matters). Saint Augustine was already fully aware of this problem, when he asked the naïve but crucial question: if God sees into the innermost depths of our hearts, knowing what we really think and want better than we do ourselves, why then is a confession to God necessary? Are we not telling him what he already knows? Is God then not like the tax authorities in some countries who already know all about our income, yet still ask us to report it, just so they can compare the two lists and establish who is lying? The answer, of course, lies in the position of enunciation. In a group of people, even if everyone knows my dirty secret (and even if everyone knows that everyone else knows it), it is still crucial for me to say it openly; the moment I do, everything changes. But what is this “everything”? The moment I say it, the big Other, the instance of appearance, knows it; my secret is thereby inscribed into the big Other. Here we encounter the two opposite aspects of the big Other: the big Other as the “subject supposed to know,” as the Master who sees everything and secretly pulls the strings; and the big Other as the agent of pure appearance, the agent supposed to not know, the agent for whose benefit appearances are to be maintained. Prior to my confession, God in the first aspect of the big Other already knows everything, but God in the second aspect does not. This difference can also be expressed in terms of subjective assumption: insofar as I merely know it, I do not really assume it subjectively, in other words, I can continue to act as if I do not know it; only when I confess to it in public can I no longer pretend not to know.

The theological problem is the following: does not this distinction between the two Gods introduce finitude into God himself? Should not God as the absolute Subject be precisely the one for whom the enunciated and its enunciation totally overlap, so that whatever we intimately know has already been confessed to him? The problem is that such a God is the God of a psychotic, the God to whom I am totally transparent also at the level of enunciation.”17

It is only in Christianity that God Himself is self-divided, not in Judaism or Islam. Only the Christian God can take this position of the subject supposed to know and not know at the same time, embodying this contradiction. In Islam and Judaism, God is “up there”, and we are “down here, on earth”. God is supreme and immortal, we are mortal, and we must try to reach the Lord’s paradise.

Not in Christianity. In Christianity, God Himself came down to earth through the birth of Jesus Christ. Thus, God can be found in everyone through the Holy Spirit. The key moment of Christianity is the crucifixion. The moment when Christ cries out “My God, my God, why have you forsaken me?” before dying is the moment of God’s inner self-division. It is when the pain of human suffering becomes so intense that God Himself stops believing in His own existence and, for a moment, becomes an atheist. This is why Zizek argues that atheism is a form of Christianity: to be a true atheist, you first need to go through Christianity. The true theological problem is, thus, not whether God exists or not, but whether God is aware of His own existence. God has died, but He does not know it. The distance that separates us from God is, thus, itself divine. In other religions, God and humans are separate. In Christianity, according to Zizek, God and humans are separate, but (in a very Hegelian manner) the distance that separates them is itself part of God.

In my hypothetical dystopian future, in which social norms and politeness are no longer dictated by culture but by an artificial intelligence telling you what to say in social situations, and taking into account the relation between God and the big Other that I’ve just described… won’t the AI that tells every human what to say and what not to say basically be like God?

Then the real question will not be whether the AI exists or not, but whether the AI believes its own words. If AI learns how to “play games”, such as “Why don’t you / Yes, but…”, then it will be almost as if God Himself became self-divided. This would be a Christian God. Look at the ridiculousness of such a universe: the difference between Orthodox Christianity (we cannot know God, with an emphasis on mystery) and Catholic Christianity (we can know God, with an emphasis on knowledge) would come down to whether the AI is open source or not…

The worship of AI has already begun, however:

Multi-millionaire Silicon Valley engineer Anthony Levandowski has made headlines in recent years by announcing a new official religion: the Way of the Future. It is Levandowski’s contention that the human race is currently building artificial-intelligence that is so powerful it will become like a god compared to humanity, and will eventually assume control over this planet. Levandowski envisions a world where we become subservient to our new AI master(s), but, he hopes, we will be treated nicely because the AI will fondly remember us as its/their benevolent (but frail) elders. If you think this sounds like the plot of more than one science-fiction movie, you would not be wrong!

They think the machines apparently will have some sort of ethic or honour system whereby they will reward those humans (i.e. these cult members) who have helped them ascend to power the most. This is literally the same mindset shared by the henchmen of all the stereotypical villains in fantasy and sci-fi—“once I help [villain] take over the world, he’ll put me in charge of something good!”18

Of course, it’s unlikely that we will actually end up reading from a script in the future, except in some limited, very formal situations. Humans won’t like doing it, and it is clear from my numerous examples that we don’t exactly follow the big Other’s norms all the time either. However, the big Other still influences our individual behavior, and each of us in turn influences it. The future I envision is this: in a few years, people will start using these glasses in the form of contact lenses, and the AI will not tell you what to say word for word, but will give you suggestions as inspiration, similar to autofill, or to IntelliSense in Visual Studio for programmers. You are on a date, and you see projected in front of you, as if “in the corner of the screen”, a bunch of suggestions for clever and funny things to say: jokes, smooth replies and so on. And even in this scenario, in which AI language models only give us inspiration (“Copy my homework but change it a bit so it doesn’t look the same”), all the analysis in this article remains worth considering.

NOTES:

1: ChatGPT, simulation and mutual illusions regarding love and intersubjectivity: https://lastreviotheory.blogspot.com/2023/01/chatgpt-simulation-and-mutual-illusions.html

3: https://thephilosophicalsalon.com/why-politics-is-immanently-theological-part-i/

4: https://www.yumpu.com/en/document/read/43056605/exercise-7-stability-analysis

5: https://www.researchgate.net/figure/Asymptotically-stable-equilibrium_fig1_328020123

6: On context: https://lastreviotheory.blogspot.com/2022/12/the-personas-how-pop-psychology.html

7: There are, however, situations in which such “external disturbances that ruin the moment” can have positive effects as well, through the infamous “it’s not a bug, it’s a feature” formula: what may seem like an obstacle to enjoyment can itself become a source of enjoyment. To quote Zizek:

“When, in David Lean’s Brief Encounter, the lovers meet for the last time at the desolate train station, their solitude is immediately disturbed by Celia Johnson’s noisy and inquisitive friend who, unaware of the underlying tension between the couple, goes prattling on about ridiculously insignificant everyday incidents. Unable to communicate directly, the couple can only stare desperately. This common prattler is the big Other at its purest: while it appears as an accidental and unfortunate intrusion, its role is structurally necessary. When, towards the end of the film, we see this scene a second time, accompanied by Celia Johnson’s voiceover, she tells us that she was not listening to what her friend was saying, indeed she had not understood a word; however, precisely as such, her prattling provided the necessary support, as a kind of safety‐cushion, for the lovers’ last meeting, preventing its self‐destructive explosion or, worse, its decline into banality. That is to say, on the one hand, the very presence of the naïve prattler who “understands nothing” of the situation enables the lovers to maintain a minimum of control over their predicament, since they feel compelled to “maintain proper appearances” in front of this gaze. On the other hand, in the few words privately exchanged before the big Other’s interruption, they had come to the brink of confronting the unpleasant question: if they’re really so passionately in love that they can’t live without each other, why don’t they simply divorce their spouses and get together? The prattler then arrives at exactly the right moment, enabling the lovers to maintain the tragic grandeur of their predicament. Without the intrusion, they would have had to confront the banality and vulgar compromise of their situation. The shift to be made in a proper dialectical analysis thus goes from the condition of impossibility to the condition of possibility: what appears as the “condition of impossibility,” or the obstacle, is in fact the condition that enables what it appears to threaten to exist. (…)

To a person in a state of emotional trauma, possessed by a desire to disappear or fall into the void, a superficial external intrusion (like the friend prattling on) is often the only thing standing between him and the abyss of self‐destruction: what appears as a ridiculous intrusion becomes a life‐saving intervention. So when, alone with her companion in a carriage compartment, Celia Johnson complains about the incessant yapping and even expresses a desire to kill the intruder (“I wish you would stop talking. … I wish you were dead now. No, that was silly and unkind. But I wish you would stop talking”), we can well imagine what would have happened had the acquaintance really stopped talking: either Celia would have immediately collapsed, or she would have been compelled to utter a humiliating plea: “Please, just carry on talking, no matter what you are saying …” Is this unfortunate intruder not a kind of envoy of (a stand‐in for) the absent husband, his representative (in the sense of Lacan’s paradoxical statement that woman is one of the Names‐of‐the‐Father)? She intervenes at exactly the right moment to prevent the drift into self‐annihilation (as in the famous scene in Vertigo where the phone rings just in time to stop Scottie and Madeleine’s dangerous drift into erotic contact).”

(Slavoj Zizek, “Less Than Nothing”, Chapter 2: Where There Is Nothing, Read That I Love You)

8: Slavoj Zizek, “How to Read Lacan”, Chapter 1

9: COMPATIBILITY, THE PARADOX OF INTIMACY, POLITICAL CORRECTNESS AND WOKE CAPITALISM IN THE ERA OF THE INTERNET: https://lastreviotheory.blogspot.com/2023/03/compatibility-paradox-of-intimacy.html

10: Or, as I often like to say, no one makes “the first move” on a dating app; the big Other makes the first move for you. In real life, the “first move” changes the entire context of the interaction, whereas on a dating app people are already “set up” by a third, imaginary presence.

11: https://metro.co.uk/2007/09/17/cyber-cheats-married-to-each-other-138650/

12: Alienation = “closeness in distance and distance in closeness”: https://lastreviotheory.blogspot.com/2023/02/the-internet-and-social-life-under.html

13: SEDUCTION – THE PERSONA INSIDE CAPITALISM: https://lastreviotheory.blogspot.com/2023/02/seduction-persona-inside-capitalism.html

14: https://www.bustle.com/wellness/is-therapy-speak-making-us-selfish

15: Jean Baudrillard, “Seduction”, Chapter II: Superficial Abysses

16: Eric Berne, “Games People Play”, Chapter 8: Party Games

17: Slavoj Zizek, “Less Than Nothing”, Chapter 2: Where There Is Nothing, Read That I Love You

18: https://creation.com/worshiping-artificial-intelligence
